Illusory Truth, Lies, and Political Propaganda

Repeat a lie often enough and people will come to believe it.

Updated March 21, 2024 Reviewed by Abigail Fagan

This is part 1 of a 2-part series on the illusory truth effect and its use in political propaganda.

“If everybody always lies to you, the consequence is not that you believe the lies, but rather that nobody believes anything any longer… And a people that no longer can believe anything cannot make up its mind. It is deprived not only of its capacity to act but also of its capacity to think and to judge. And with such a people you can then do what you please.”

—Hannah Arendt

“The truth is always something that is told, not something that is known. If there were no speaking or writing, there would be no truth about anything. There would only be what is.”

—Susan Sontag, The Benefactor

The Illusory Truth Effect

Many of us are familiar with the quotation, “Repeat a lie often enough and people will eventually come to believe it.”

Not ironically, the adage — often attributed to the infamous Nazi Joseph Goebbels — is true and has been validated by decades of research on what psychology calls the “illusory truth effect.” First described in a 1977 study by Temple University psychologist Dr. Lynn Hasher and her colleagues, the illusory truth effect occurs when repeating a statement increases the belief that it’s true even when the statement is actually false.1

Subsequent research has expanded what we know about the illusory truth effect. The effect doesn’t occur only through repetition; it can arise from any process that increases familiarity with a statement or the ease with which the brain processes it (what psychologists in this context call a statement’s “fluency”). For example, the perceived truth of written statements can be increased by presenting them in bold, high-contrast fonts2 or, in the case of aphorisms, by expressing them as a rhyme.3

According to a 2010 meta-analytic review of the truth effect (which applies to both true and false statements),4 while the perceived credibility of a statement’s source increases perceptions of truth as we might expect, the truth effect persists even when sources are thought to be unreliable and especially when the source of the statement is unclear. In other words, while we typically evaluate a statement’s truth based on the trustworthiness of the source, repeated exposure to both information and misinformation increases the sense that it’s true, regardless of the source’s credibility.

The illusory truth effect tends to be strongest when statements are related to a subject about which we believe ourselves to be knowledgeable,5 and when statements are ambiguous such that they aren’t obviously true or false at first glance.4 It can also occur with statements (and newspaper headlines) that are framed as questions (e.g., “Is President Obama a Muslim?”), something called the “innuendo effect.”6

But one of the most striking features of the illusory truth effect is that it can occur despite prior knowledge that a statement is false7 as well as in the presence of real “fake news” headlines that are “entirely fabricated…stories that, given some reflection, people probably know are untrue.”8 It can even occur despite exposure to “fake news” headlines that run against one's party affiliation. For example, repeated exposure to a headline like “Obama Was Going to Castro’s Funeral—Until Trump Told Him This” increases perceptions of truth not only for Republicans but Democrats as well.8 And so, the illusory truth effect occurs even when we know, or want to know, better.

In summary, psychology research has shown that any process that increases familiarity with false information — through repeated exposure or otherwise — can increase our perception that the information is true. This illusory truth effect can occur despite being aware that the source of a statement is unreliable, despite previously knowing that the information is false, and despite it contradicting our own political affiliation’s “party line.”

Illusory Truth and Political Propaganda

In the current “post-truth” era of “fake news” and “alternative facts” (see my previous blog posts, “Fake News, Echo Chambers & Filter Bubbles: A Survival Guide” and “Psychology, Gullibility, and the Business of Fake News”), the illusory truth effect is especially relevant and deserves to be a household word.

That said, the use of repetition and familiarity to increase popular belief and to influence behavior is hardly a new phenomenon. The use of catchy slogans or songs, regardless of their veracity, has always been a standard and effective component of advertising. For example, “puffing” is an advertising term for making baseless claims about a product, a practice that, despite leaving a company liable to false advertising litigation, no doubt often remains profitable in the long run.

In politics, repeating misinformation and outright lies was a powerful tool for swaying public opinion long before the illusory truth effect was ever demonstrated in a psychology experiment. In Nazi Germany, Adolf Hitler famously wrote about the ability to use the “big lie” (a lie so outlandish that it would be believed on the grounds that no one would think anyone would lie so boldly) as a tool of political propaganda. Goebbels, the head of Nazi propaganda quoted earlier, is said to have likewise favored the repetition of lies in order to sell the public on the greatness of Hitler and the Nazi party.

Consequently, the political philosopher Hannah Arendt characterized the effectiveness of lying as a political tool in her seminal post-war classic The Origins of Totalitarianism:

“Society is always prone to accept a person offhand for what he pretends to be, so that a crackpot posing as a genius always has a certain chance to be believed. In modern society, with its characteristic lack of discerning judgment, this tendency is strengthened, so that someone who not only holds opinions but also presents them in a tone of unshakable conviction will not so easily forfeit his prestige, no matter how many times he has been demonstrably wrong. Hitler, who knew the modern chaos of opinions from first-hand experience, discovered that the helpless seesawing between various opinions and ‘the conviction that everything is balderdash’ could best be avoided by adhering to one of the many current opinions with ‘unbendable consistency.’

…the propaganda of totalitarian movements which precede and accompany totalitarian regimes is invariably as frank as it is mendacious, and would-be totalitarian rulers usually start their careers by boasting of their past crimes and carefully outlining their future ones.”

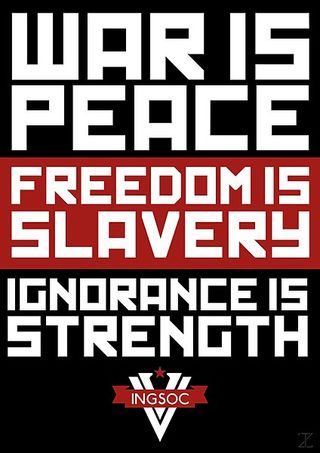

In the novel 1984, George Orwell likewise portrayed a fictitious dystopia inspired by the Soviet Union under Stalin in which a totalitarian political party oppresses the public through “doublethink” propaganda epitomized in the slogan, “War is Peace, Freedom is Slavery, Ignorance is Strength.” “Doublethink,” Orwell wrote, consists of “the habit of impudently claiming that black is white, in contradiction to plain facts…it means also the ability to believe that black is white, and more, to know that black is white, and to forget that one has ever believed the contrary.” In 1984, this is achieved through a constant contradiction of facts and revision of history to the point that people are left with little choice but to resign themselves to accept party propaganda:

“The party told you to reject the evidence of your eyes and ears. It was their final, most essential command.”

Needless to say, “doublethink,” along with Orwell’s description of “newspeak,” gave rise to the modern term “doublespeak” defined by Merriam-Webster as “language used to deceive usually through concealment or misrepresentation of truth.”

To learn more about how doublespeak and the illusory truth effect are exploited in modern politics, from Russia’s “firehose of falsehood” to President Trump’s “alternative facts,” please continue reading “Illusory Truth, Lies, & Political Propaganda: Part 2.”

References

1. Hasher L, Goldstein D, Toppino T. Frequency and the conference of referential validity. Journal of Verbal Learning and Verbal Behavior 1977; 16:107-112.

2. Reber R, Schwarz N. Effects of perceptual fluency on judgments of truth. Consciousness and Cognition: An International Journal 1999; 8:338-342.

3. McGlone MS, Tofighbakhsh J. Birds of a feather flock conjointly (?): Rhyme as reason in aphorisms. Psychological Science 2000; 11:424-428.

4. Dechene A, Stahl C, Hansen J, Wanke M. The truth about the truth: a meta-analytic review of the truth effect. Personality and Social Psychology Review 2010; 14:238-257.

5. Arkes HR, Hackett C, Boehm L. The generality of the relation between familiarity and judged validity. Journal of Behavioral Decision Making 1989; 2:81-94.

6. Wegner DM, Wenzlaff R, Kerker RM, Beattie AE. Incrimination through innuendo: can media questions become public answers? Journal of Personality and Social Psychology 1981; 40:822-832.

7. Fazio LK, Brashier NM, Payne BK, Marsh EJ. Knowledge does not protect against illusory truth. Journal of Experimental Psychology: General 2015; 144:993-1002.

8. Pennycook G, Cannon TD, Rand DG. Prior exposure increases perceived accuracy of fake news. Journal of Experimental Psychology: General 2018; 147:1865-1880.