Coronavirus Disease 2019

Why Misinformation Goes Viral

Psychological factors affect the spread of misinformation during crises.

Posted August 12, 2020 | Reviewed by Gary Drevitch

In the continuing war on misinformation, LinkedIn and Facebook just removed millions of posts containing misinformation about the coronavirus, and that is believed to be only a fraction of such posts. When John Oliver tackled the flood of misinformation surrounding the COVID-19 pandemic, he cited the "proportionality bias," the idea that big events demand big explanations, to explain why misinformation, particularly conspiracy theories, has had a heyday during the current crisis. However, the science suggests that more than the proportionality bias is at play in making this crisis exceptionally fertile ground for the seeds of misinformation.

Negativity Bias. For starters, our evolutionary heritage leads us generally to pay more attention to negative information than to positive information. This negativity bias was important to the survival of our species, as attending to the tiger prowling the encampment was more imperative than celebrating the latest birth. We see this reflected in our news, which disproportionately features headlines about the latest tragedy while feel-good stories are relegated to the back pages. And recent research has shown that this attention to negative news is evident at the physiological (and neurological) level across samples in 17 different countries.

Ultimately, this means that if something bad is happening, it has our attention. A once-a-century pandemic killing hundreds of thousands certainly qualifies. Thus, we are already on the lookout for information. Further, evidence suggests that negative information is viewed as more credible than positive information. So not only are we paying attention, but we are also primed to believe what we find.

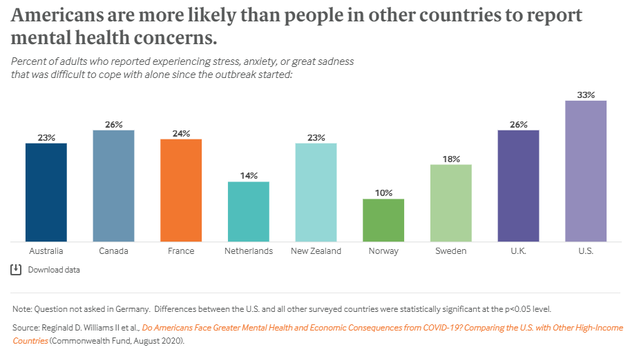

Social Risk Amplification. This negativity bias gets a boost when information is shared. In a recent interview, the spread of misinformation was likened to a "screwed up game of telephone." In fact, telephone-style "diffusion chain experiments" are a common choice in experimental studies examining the transmission of information. In a 2015 study, researchers had chains of 10 participants pass along information about the risks and benefits of a controversial antibacterial agent, triclosan. Overall, the messages became shorter and increasingly inaccurate. By the end of the diffusion chain, however, information about the benefits had largely been lost, whereas information about the risks continued to spread. Further, individual biases about risks led to the amplification of those risks down the chain. A follow-up 2020 study showed that this amplification effect is even stronger when people are feeling stressed. Unfortunately, new research shows that Americans are even more stressed about the coronavirus than people in other nations.

Dread Risks. As if that weren't enough to provide a megaphone for misinformation during stressful times, social risk amplification is even more likely when people are experiencing what are called dread risks, i.e., life-altering, disastrous, random events that pose a threat to life. In a 2018 study, researchers using an 8-person diffusion chain paradigm randomly assigned groups to transmit low- or high-dread risk messages. As the message passed from person to person, the high-dread chains became more and more negative in their transmission of the message relative to the low-dread chains, and those messages became more and more distorted. We saw a real-life example of this in the transmission of information about the Ebola outbreak in 2015 via Twitter and Facebook, where the diffusion chains are no longer just 8 or 10 people long but spread across millions of users, each with their own megaphones of various wattage.

Frustration with the Scientific Method. To further complicate matters, the current pandemic features a novel coronavirus, meaning there wasn't a wealth of accurate scientific information at the outset of the outbreak. How could there be? It was new. Scientists are racing to find answers. Science, however, is constrained by the scientific method, which is much slower than the pace at which fearful, stressed citizens want information. It customarily takes considerable time to build a scientific consensus. Thus, in this race for information, science often lags behind misinformation because the Twitter user recommending alcohol to combat COVID-19 is not similarly constrained.

The scientific method can be further frustrating to individuals when the process of replication and peer review kicks in and leads to the modification or retraction of findings. Such updates are more likely when there is a race for answers and usually meticulous methods are treated as less important than expediency. When recommendations are altered based on new evidence, this is a sign that science is working. However, when sources that are supposed to "have the facts" change course, it can result in a tainted truth effect, damaging trust in those sources. Meanwhile, our Facebook friends are not held to the same standard.

Fear and Shallow Processing. Consequently, the wrong information, if repeated and stated with certainty, can be more persuasive than the latest science, especially when we don't take the time to process messages (e.g., read beyond the headline). Shallow processing is even more likely on the internet, where information flies by at the speed of a scroll, and among people who are afraid and therefore looking for any type of action to protect themselves.

Due to this perfect storm of contributing factors, we are seeing the spread of misinformation take on pandemic proportions (now available in 25 languages). Scientists often feel like they are combating two viruses. As citizens wishing to avoid infection, we need to practice good information hygiene in addition to good personal hygiene. That starts with recognizing some of the signs that something you encounter might be misinformation, just as you would be on high alert when you hear someone cough or sneeze. Further, engage in basic fact-checking. Be the investigator: find the source of the information and weigh the evidence supporting its claims. If you don't have the time to investigate, do not share. You could save a life by stopping the spread of misinformation.