Advanced AI May Be Coming Soon: What Can We Do?

Insights from "Uncontrollable: The Threat of Artificial Superintelligence."

Posted March 12, 2024 | Reviewed by Michelle Quirk

Key points

- Advanced AI may be coming much sooner than we expect.

- There is a very significant risk that AI will change our lives for the worse.

- There are several changes that we can make as a society and as individuals to support safe, responsible AI development.

“The unthinkable becomes possible. The possible becomes actual. The actual becomes ordinary and then fades into the background of our lives.” –Darren McKee

I recently read Uncontrollable: The Threat of Artificial Superintelligence and the Race to Save the World by Darren McKee. It’s an excellent book and my top recommendation for a quick introduction to the risks of artificial intelligence (AI). Here are some of the key insights and messages.

Advanced AI may be coming much sooner than we expect.

McKee begins by discussing the speed at which AI is advancing and gives some examples of cases where we underestimated how quickly a new technology would arrive.

The airplane is a great example. In October 1903, an article in the New York Times predicted that a flying machine might be one million to ten million years away. Just over two months later, in December 1903, the Wright brothers made the first successful airplane flight. Only 66 years after that, in 1969, the Concorde made its first supersonic flight. Being caught off guard by technological progress wasn't limited to outside commentators. Even Wilbur Wright, who helped achieve that first flight in 1903, greatly underestimated progress, saying, “I confess that in 1901, I said to my brother Orville that man would not fly for 50 years.”

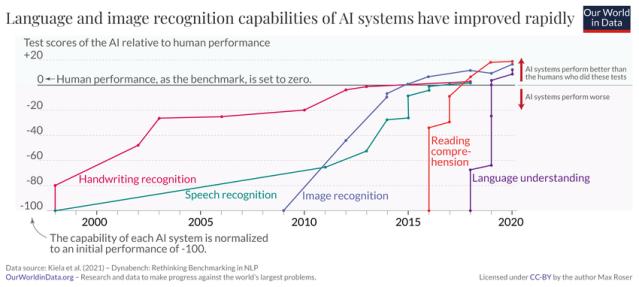

In less than a century, we went from people predicting that we wouldn’t have flight for a million years to having planes that could fly faster than the speed of sound: an incredible rate of progress. It is hard to make an exact comparison between progress in flight and AI, but by any metric, AI is progressing extremely fast. Many experts and forecasting platforms estimate that we will have human-level AI within the next few decades, if not sooner. The chart below from Our World in Data gives a good sense of the speed of current progress.

In many domains, we have had huge improvements in performance in relatively short periods of time. In just a few years, we have gone from AI performing worse than humans on many benchmarks to significantly outperforming them. If you have been following the news, you will be aware that these changes are showing no sign of slowing down; tools such as OpenAI’s Sora continue to demonstrate new breakthroughs in performance.

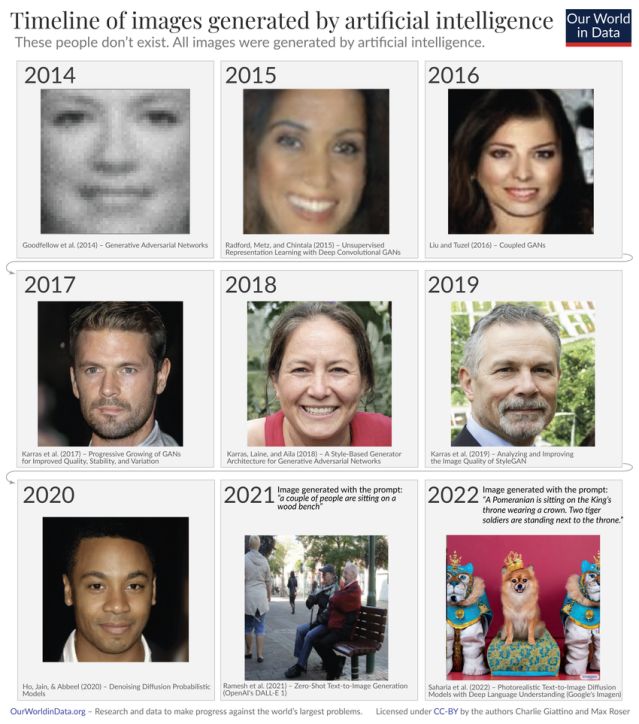

Seeing this in practice is perhaps even more impressive. For instance, the image below shows how text-to-image generation has improved over eight years, going from clearly computer-generated and low-quality to photorealistic and accurate.

This progress is part of the reason why many experts and forecasting platforms predict that we will have transformative AI in the next few decades. For instance, one of the most recent surveys finds that experts assess the chance of unaided machines outperforming humans in every possible task as being 10 percent by 2027 and 50 percent by 2047. Notably, the second estimate is 13 years earlier than in the previous year's survey, which suggests that expectations of progress are accelerating.

AI may change our lives more than we can conceive.

The book argues that one of the biggest implications of advanced AI is that it will change our lives in ways that we can't even imagine. McKee gives some excellent examples of how science and technology have had a dramatic impact on our lives.

Consider music. In the 1860s, most people would have had no idea what you were talking about if you asked them to listen to recorded music. It would have been an incredible, inconceivable luxury to have music available at all times, let alone to get recommendations or to share it easily with friends. Of course, this is also true of almost everything we have and do now; technologies such as Wi-Fi, transistors, corrective lenses, computers, and mobile phones were always possible with existing materials but not conceived or achieved until very recently.

Additionally, there have always been big changes in one time period that would have been inconceivable to those from an earlier time. Our societies today are quite exceptional by historical standards. This is mostly because of the Industrial Revolution. If you lived any time before this, you would have had a very different expectation of life and probably no sense of what might have been possible. Having machines work for us was clearly an incredible change, and AI may well be similarly transformative.

There is a very significant risk that AI will change our lives for the worse.

Having established that advanced AI may come soon and is likely to change our lives, the book then makes a convincing argument that we shouldn’t assume that the changes from AI will be positive.

Many, if not most, of those who work on AI are worried about how it could harm us. Incredibly, before becoming CEO of OpenAI, Sam Altman once said, “AI will probably most likely lead to the end of the world, but in the meantime, there’ll be great companies.” To my knowledge, this is the first time someone at the forefront of developing a new technology has stated that the same technology will probably end the world! "Stranger than fiction" seems an appropriate term here.

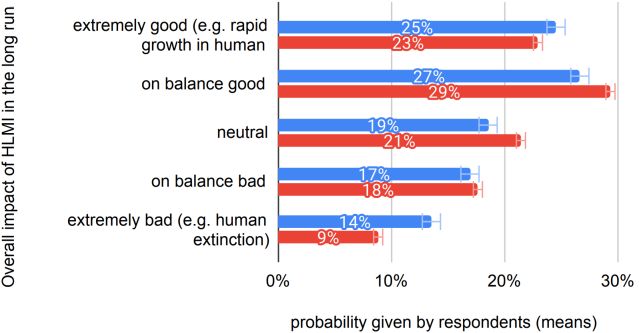

Many experts are clearly worried. In a study from 2022, nearly half of the AI experts surveyed predicted at least a 10 percent chance that the overall impact of advanced AI systems on humanity will be “extremely bad (e.g., human extinction).” As shown below, a more recent follow-up survey found that expert researchers believed that there was a 14 percent chance that future AI advances cause human extinction or similarly permanent and severe disempowerment.

McKee accepts that AI can and will have good uses and that AI may turn out to be good. But he argues that that’s not really the point. He makes a convincing argument that even if we cannot know the future, the balance of current evidence suggests that we should be prudent in evaluating and preparing for advanced AI. At the very least, we should all be thinking carefully about what is happening. More realistically, we should also be taking precautions.

Who needs to do what differently?

McKee outlines several changes that we can make as a society and as individuals.

As a society, we need to take steps to ensure that AI is developed in a safe and responsible manner. To this end, McKee offers eight related proposals for safe AI innovation:

- Establish liability for AI-caused harm.

- Require evaluation of powerful AI systems.

- Regulate access to computing power and resources.

- Require enhanced security: cyber, physical, and personnel.

- Invest in AI safety research.

- Create dedicated national and international governance agencies and organizations.

- Require a license to develop advanced AI models.

- Require labeling of AI content.

McKee also suggests a range of opportunities for individuals:

- Political advocacy: Contacting government officials about their plans for managing AI and demanding realistic and comprehensive plans.

- Media advocacy: Sharing informative and accurate AI safety content, supporting creators of good content, and requesting more accurate information from sources spreading misinformation.

- Careers in AI safety: Seeking employment in AI safety, utilizing guidance from platforms like 80,000 Hours, and bringing relevant skills to the field.

- Donations: Financially supporting AI safety organizations and encouraging those with the means to contribute toward AI safety initiatives.

- Volunteering: Offering time and skills to AI safety projects, gaining valuable experience, and contributing to the field.

- Networking: Connecting with AI safety communities, participating in discussions, and staying informed about the latest in AI safety.

- Staying informed: Utilizing podcasts, YouTube videos, newsletters, and forums to keep abreast of developments and insights in AI safety.

Follow MIT FutureTech if you are interested in understanding the drivers of progress in computation and AI and the related social implications.

References

Darren McKee, Uncontrollable: The Threat of Artificial Superintelligence and the Race to Save the World. 2023.

Louis Anslow. In 1903, New York Times predicted that airplanes would take 10 million years to develop. Big Think. April 2022.

Jesse Galef. Sam Altman Investing in ‘AI Safety Research.’ Future of Life Institute. June 6, 2015.