
Is Bias in AI Necessarily a Problem?

It likely depends on the situation.

Posted February 5, 2024 | Reviewed by Michelle Quirk

Key points

- Bias in AI is not inherently negative, and there is a distinction between bias and accuracy.

- AI biases often stem from the training data or the design of the algorithm.

- The issue of bias and accuracy in AI underscores the need for balancing accuracy with ethical considerations.

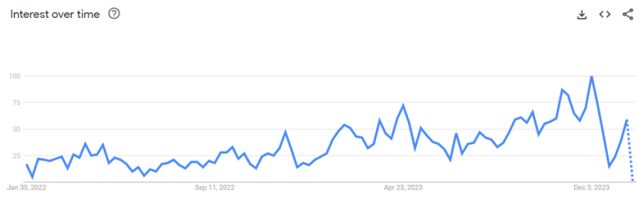

As artificial intelligence (AI) usage has grown, the potential for bias in AI has gained more attention, as evidenced by Google Trends data (Figure 1). A recent study[1] by Herrera-Berg et al. (2023) examined the issue of bias, focusing specifically on the tendency of large language models (LLMs), like ChatGPT or Llama, to “overestimate profoundness.”

To briefly summarize, the study extended the work of Pennycook et al. (2015) into the domain of AI: it had various LLMs rate the profoundness of mundane, profound,[2] and pseudoprofound statements under different prompts and compared those ratings with human ratings. The LLMs demonstrated a general bias toward profoundness, rating all three types of statements higher than humans did.[3] Notably, the AIs were no better than humans at discriminating between pseudoprofound and profound statements.

But that caused me to ponder the following:

- Is it necessarily problematic for an AI’s results to be biased?

- How does bias relate to accuracy?

Before I dive more deeply into these two questions, a quick review of what bias is and isn’t is warranted. So, let’s start there.

Bias—What It Is and What It Isn’t

If you look around the web, you’ll find lots of discussion of bias as it relates to AI. One example comes from Dilmegani (2023), who wrote that “AI bias is an anomaly in the output of machine learning algorithms, due to the prejudiced assumptions made during the algorithm development process or prejudices in the training data.” This is representative of the modern view, which treats bias strictly as a source of error.

But as I argued back in 2020, a bias is nothing more than a default tendency or predisposition. It’s a prediction that still allows us to make a decision when we lack sufficient information about a situation to make a more informed one. The conclusion I reached there was that “biases are quite adaptive when (1) they do not meaningfully impact decision quality or (2) when there is evidence to support them.” It’s only when biases “cause us to rely on faulty information to reach a conclusion or…do not generalize to a given situation” that they lead to error.
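
To make the idea of a bias as a default prediction concrete, here is a minimal Python sketch. It assumes a toy forecaster (the helpers `learn_default`, `predict`, and `hit_rate` are hypothetical, and the weather numbers are invented) whose “bias” is simply the most common outcome it has seen, and which falls back on that default only when no specific evidence is available.

```python
from collections import Counter


def learn_default(past_outcomes):
    """Return the most common past outcome: the 'default tendency' (bias)."""
    return Counter(past_outcomes).most_common(1)[0][0]


def predict(evidence, default):
    """Use specific evidence when it exists; otherwise fall back on the bias."""
    return evidence if evidence is not None else default


def hit_rate(default, actual_outcomes):
    """Share of cases in which relying only on the default would be correct."""
    return sum(outcome == default for outcome in actual_outcomes) / len(actual_outcomes)


# Hypothetical history: 8 dry days and 2 rainy days.
history = ["dry"] * 8 + ["rain"] * 2
default = learn_default(history)

print(predict(None, default))    # no forecast available: falls back on "dry"
print(predict("rain", default))  # evidence available: "rain"

# The default works well where the evidence supports it...
print(hit_rate(default, ["dry"] * 8 + ["rain"] * 2))  # 0.8
# ...and leads to error where it does not generalize.
print(hit_rate(default, ["dry"] * 3 + ["rain"] * 7))  # 0.3
```

The same default is right 80% of the time in a setting that matches its history and only 30% of the time in one that does not, which mirrors the two conditions quoted above.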

Problematizing Bias in AI

The idea that biases necessarily lead to error is inaccurate.[4] So, to the first question, the answer is no: It isn’t necessarily problematic for an AI’s results to be biased. For example, we want an AI’s results to be biased toward qualified job candidates and against unqualified ones. We want an AI designed to aid in health care diagnostics to be biased toward higher-quality rather than lower-quality evidence.

Now, when people discuss bias in AI, most of them really use the term bias to mean “demographic-based” differences in outcomes. If such differences are present, the assumption appears to be that the AI has favored a particular group over others or has made more errors for one group than for others.

But there’s a difference between (1) an AI producing valid results where groups simply end up differing and (2) an AI using demographic data inappropriately[5] to produce those outcomes. If the data used to produce the outcomes are appropriate, valid criteria for predicting those outcomes, then any resulting group differences reflect a bias toward those criteria, not toward or against a given group. So, in this instance, it isn’t a case of bias against a group or of error.[6]
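
Case (2) can be probed with a simple counterfactual check: change only the demographic field and see whether the output moves. The sketch below is hypothetical throughout (the scoring functions, weights, and field names are invented). Passing the check shows only that group membership is not used directly; it does not show that the criteria are valid, and it would not catch criteria that act as proxies for group membership.

```python
# Hypothetical scoring functions; the weights and field names are invented.
def criteria_only_score(applicant):
    """Scores using job-specific criteria only; never reads the group field."""
    return 2.0 * applicant["skills_test"] + 1.0 * applicant["years_experience"]


def demographic_score(applicant):
    """Same criteria, but inappropriately adds a bonus tied to group membership."""
    bonus = 5.0 if applicant["group"] == "X" else 0.0
    return criteria_only_score(applicant) + bonus


def changes_when_group_flipped(score_fn, applicant, groups=("X", "Y")):
    """Counterfactual check: does the score change if only the group label changes?"""
    scores = {score_fn({**applicant, "group": g}) for g in groups}
    return len(scores) > 1


applicant = {"skills_test": 7.0, "years_experience": 3.0, "group": "Y"}
print(changes_when_group_flipped(criteria_only_score, applicant))  # False
print(changes_when_group_flipped(demographic_score, applicant))    # True
```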

On the flip side, there are times when “demographic-based” differences in outcomes are more akin to error, and this error is likely a function of bias. For example, we don’t want an AI to ignore relevant sex- or race-based factors that might improve prediction in medical diagnostic decisions. And we certainly don’t want to see the extremely high error rates we’ve seen for various facial types in the domain of facial recognition (Farrell, 2023). Some of these issues stem from limitations in the training data, which produce a tendency to rely on the features most predictive for identifying the faces that were included. In the case of facial recognition, too many light-skinned, European faces in the data set lead the AI to maximize performance in recognizing those faces, which then makes it less effective at recognizing faces outside that group.
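
A toy sketch can show the training-data mechanism, though real facial recognition systems are far more complex. It assumes one numeric feature, a single cutoff (predict 1 if the value is at least the cutoff), group B's values shifted relative to group A's, and a training set dominated by group A. All data and the helpers `fit_threshold` and `error_rate` are invented for illustration.

```python
def count_errors(samples, t):
    """Number of (value, label) pairs misclassified by the rule 'predict 1 if x >= t'."""
    return sum((x >= t) != bool(y) for x, y in samples)


def fit_threshold(samples, candidates=range(0, 15)):
    """Pick the cutoff with the fewest errors on the (pooled) training samples."""
    return min(candidates, key=lambda t: count_errors(samples, t))


def error_rate(samples, t):
    return count_errors(samples, t) / len(samples)


# Toy data: (feature value, true label). Group B's values are shifted upward.
group_a = [(x, 0) for x in (0, 1, 2, 3)] + [(x, 1) for x in (6, 7, 8, 9)]
group_b = [(x, 0) for x in (4, 5, 6, 7)] + [(x, 1) for x in (10, 11, 12, 13)]

# Imbalanced training set: group A appears nine times as often as group B.
training = group_a * 9 + group_b

t = fit_threshold(training)
print(t)                       # 6
print(error_rate(group_a, t))  # 0.0
print(error_rate(group_b, t))  # 0.25
```

Minimizing total training error favors the majority group: the chosen cutoff classifies every group A case correctly but misclassifies a quarter of group B. In this toy, no single cutoff fits both groups perfectly, which hints that representative data and adequate features both matter.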

All this leads to the following conclusions. No, bias is not automatically a problem. We want our AI to be biased toward higher accuracy in the outcomes it produces. But we also want to ensure that we're not (1) misusing demographic data to put people in different demographic groups on unequal footing or (2) failing to adequately use appropriate demographic data when it is relevant to the accuracy of an outcome for people in those groups.

Bias and Accuracy

In circling back to the study by Herrera-Berg et al. (2023), their use of the term bias was more aligned with the definition of bias I discussed back in 2020. They observed that the LLMs consistently rated the profoundness of statements higher than human evaluators did. This phenomenon, where the AI's ratings diverge from the human benchmark, exemplifies a bias in the context of AI assessment.
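
Bias in this sense, a systematic divergence from a benchmark, can be quantified as the mean signed difference between paired model and human ratings. The sketch below uses invented ratings on a 1 to 5 scale, not the study's data, and a hypothetical helper name. A positive value says only that the model rates items higher than humans do on average, not that either is correct.

```python
from statistics import mean


def mean_signed_difference(model_ratings, human_ratings):
    """Average of (model - human) over items rated by both.

    Positive: the model rates higher than humans on average. This measures
    divergence from the human benchmark, not correctness.
    """
    if len(model_ratings) != len(human_ratings):
        raise ValueError("ratings must be paired item by item")
    return mean(m - h for m, h in zip(model_ratings, human_ratings))


# Invented ratings for three statement types (NOT data from the study).
ratings = {
    "mundane": {"model": [2, 3, 2], "human": [1, 2, 1]},
    "profound": {"model": [5, 4, 5], "human": [4, 4, 4]},
    "pseudoprofound": {"model": [4, 4, 3], "human": [3, 3, 3]},
}

for kind, r in ratings.items():
    print(kind, round(mean_signed_difference(r["model"], r["human"]), 2))
```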

However, this leads to a pivotal distinction in discussions about AI: the difference between bias and accuracy. The presence of bias does not inherently imply a lack of accuracy, even though modern discussions of issues like the bias-variance tradeoff treat bias as if it were synonymous with systematic inaccuracy. In the study by Herrera-Berg et al., while the LLMs showed a tendency to favor profoundness, labeling this as less accurate would require a universally accepted standard of profoundness, which is inherently subjective. So, yes, there was a bias on the part of the AI. But, no, there was no evidence the bias affected accuracy because, in this case, there is no standard for accuracy.
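
Even within the bias-variance framing, bias and accuracy can come apart. There, an estimator's bias is its average deviation from a known true value, and its mean squared error (MSE), a standard accuracy measure, decomposes as MSE = bias² + variance. The sketch below uses that textbook decomposition to show, for one assumed setting, that a deliberately biased estimator (the sample mean shrunk toward zero by a factor `c`) can be more accurate than the unbiased sample mean. The function names, parameter values, and the normal-distribution assumption in the simulation are illustrative choices.

```python
import random


def shrinkage_mse(true_mean, sigma, n, c):
    """Exact bias, variance, and MSE of the estimator c * (sample mean).

    Assumes n independent draws with mean `true_mean` and standard deviation `sigma`.
    c = 1 gives the ordinary (unbiased) sample mean; c < 1 shrinks it toward zero.
    """
    bias = (c - 1) * true_mean          # systematic offset from the true value
    variance = c ** 2 * sigma ** 2 / n  # spread of the estimate across samples
    return bias, variance, bias ** 2 + variance  # MSE = bias^2 + variance


def simulated_mse(true_mean, sigma, n, c, trials=20_000, seed=0):
    """Monte Carlo estimate of the same MSE, drawing normally distributed samples."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        sample_mean = sum(rng.gauss(true_mean, sigma) for _ in range(n)) / n
        total += (c * sample_mean - true_mean) ** 2
    return total / trials


# Assumed setting: true mean 1, standard deviation 3, samples of size 5.
for c in (1.0, 0.5):
    bias, variance, mse = shrinkage_mse(1.0, 3.0, 5, c)
    print(f"c={c}: bias={bias:+.2f}, variance={variance:.2f}, "
          f"MSE={mse:.2f}, simulated MSE={simulated_mse(1.0, 3.0, 5, c):.2f}")
```

Under these assumptions, the shrunken estimator has a bias of -0.50 but an MSE of 0.70, versus 1.80 for the unbiased sample mean. The advantage depends on the true value, though: with a true mean of 4, the same shrinkage gives an MSE of 4.45, so the biased default pays off only when the situation supports it.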

The issue becomes more nuanced when we consider scenarios like job selection processes, where there is a clear standard for accuracy (i.e., we can test to see how valid our predictions are). Suppose an AI system tends to select more men than women for a particular job. This pattern could indicate a gender bias, but it doesn't automatically mean the selections are inaccurate.[7] If the applicant pool is predominantly male or if more men in the pool meet the job criteria, the AI's decisions, while seemingly biased, might still be accurate in terms of matching qualifications with job requirements.
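
The pool-composition case can be made concrete with invented numbers. In this hypothetical pool of 70 men and 30 women, exactly half of each group meets the job criteria. A rule that looks only at those criteria (the `select` helper is hypothetical) selects more men than women, yet each group's selection rate is identical.

```python
from collections import Counter

# Hypothetical applicant pool: (group, meets_job_criteria).
# 70 men and 30 women; in both groups exactly half meet the criteria.
pool = ([("men", True)] * 35 + [("men", False)] * 35
        + [("women", True)] * 15 + [("women", False)] * 15)


def select(applicants):
    """A group-blind rule: select everyone who meets the job criteria."""
    return [group for group, qualified in applicants if qualified]


selected = Counter(select(pool))
applied = Counter(group for group, _ in pool)
rates = {g: selected[g] / applied[g] for g in applied}

print(dict(selected))  # {'men': 35, 'women': 15}
print(rates)           # {'men': 0.5, 'women': 0.5}
```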

Understanding the source of bias is crucial, whether it's in assessing profoundness or in job selection. Does the bias stem from the AI's training data (which might have overrepresented profound statements or male candidates), from an intrinsic aspect of the algorithm's design, or from valid reasons why the AI should have produced what appears to be biased results? This analysis not only helps pinpoint the cause; it also helps determine whether the bias adversely affects accuracy and, if so, how to mitigate it.
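
One first step toward the training-data question is to compare how often each group (or type of example) appears in the training data with how often it appears where the system will be used. The sketch below is a minimal, hypothetical version of that comparison with invented counts. A skewed ratio only points to a possible source of bias; it does not by itself show that accuracy suffers.

```python
from collections import Counter


def representation_ratios(training_groups, reference_groups):
    """Each group's share of the training data divided by its share of a reference population.

    Ratios above 1 suggest over-representation in training; below 1, under-representation.
    Groups that appear only in the training data are ignored.
    """
    train, ref = Counter(training_groups), Counter(reference_groups)
    n_train, n_ref = sum(train.values()), sum(ref.values())
    return {g: (train[g] / n_train) / (ref[g] / n_ref) for g in ref}


# Invented example: training data is 90% group A; the population of use is 60% A.
training = ["A"] * 90 + ["B"] * 10
population = ["A"] * 60 + ["B"] * 40
print(representation_ratios(training, population))
```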

In considering AI accuracy, we must also ponder the standards we set. What are the benchmarks for an AI's "accurate" judgment, especially in subjective domains or in contexts like job selection where societal norms and equity considerations play a significant role? The debate over these standards is not just academic but also has practical implications in diverse AI applications, from literary analysis to human resources.

Wrapping Things Up

The exploration of bias and accuracy in AI, as highlighted through studies like Herrera-Berg et al. (2023) and practical scenarios such as AI in job selection, underscores a critical reality: Bias in AI is not inherently indicative of error or inaccuracy. Rather, it's a prompt to examine the nuances of AI systems, including their data, algorithms, and application contexts. This understanding is vital, especially when discerning between biases that uphold valid criteria and those that inadvertently perpetuate demographic disparities. As AI continues to evolve, the challenge lies in developing and adhering to nuanced standards that balance accuracy with ethical considerations, so that AI serves as a tool for effective decision-making.

References

1. Thanks to Rolf Degen for bringing this to my attention on Twitter.

2. Operationalized as legitimate motivational statements.

3. There were some idiosyncratic exceptions; please read the article for more information about those.

4. Yet it still pervades so much modern discourse.

5. We should be confining our AI to job-specific qualifications, and, except in rare circumstances, those do not include demographics.

6. A counterargument here is that different criteria could lead to just as valid a prediction without producing as many group differences. This is an argument that has some merit in some situations but is certainly not applicable in every instance.

7. Unless, of course, there’s an unstated goal to select equal numbers of candidates from different groups. But this goal could itself be inconsistent with selection based on job-specific hiring criteria.

Dilmegani, C. (2023). Bias in AI: What it is, Types, Examples & 6 Ways to Fix it in 2024. AI Multiple.

Farrell, D. (2023, June 16). Facial recognition software in airports raises privacy and racial bias concerns. GBH.