
The War on Experts

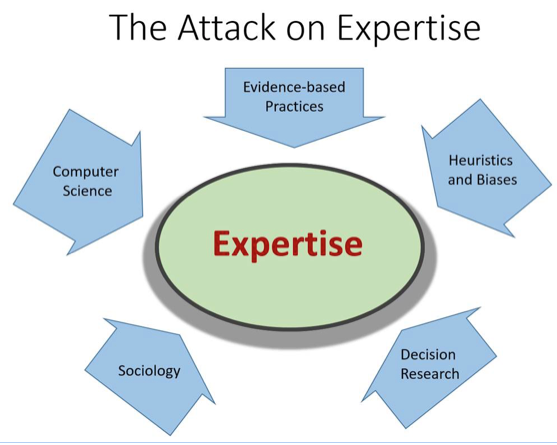

Five professional communities are trying to discredit expertise.

Posted September 6, 2017

There is an intentional effort to reduce our confidence in experts and to cast doubt on expertise itself. This effort, in many ways, feels like a war waged for intellectual turf, scientific credibility, and political and even economic gain.

My view is that most of the claims of these expertise-deniers are misleading, and that their arguments tend to be overstatements. But these claims and arguments cannot simply be ignored, because they are having some effect. Therefore, I want to rebut the misleading claims and the overstated arguments. My colleagues and I have prepared several rebuttals: a chapter that is also titled “The War on Experts” (Klein et al., in press), and a short article (Klein et al., 2017) based on this chapter. I will also be giving a presentation on the War on Experts in a panel at the Human Factors and Ergonomics meeting in October 2017 in Austin, Texas.

In this essay I want to briefly summarize the major themes in the chapter and the article.

The five communities engaged in this war on experts are: Decision Research, Heuristics & Biases (HB), Sociology, Evidence-Based Performance, and Information Technology.

Decision Research. The primary studies conducted by this community have shown that statistical models outperform experts. Yet what is often forgotten is that the variables in the formulas were originally derived from the advice of experts. The primary advantage of the formulas is that they are consistent. However, the formulas tend to be brittle: when they fail, they fail miserably. And the experiments tend to be carefully controlled, avoiding the messy conditions that experts have to contend with, such as ill-defined goals, shifting conditions, high stakes, and ambiguity about the nature and reliability of the data. Further, the research usually focuses on single measures, and ignores aspects of performance that are ambiguous and difficult to quantify. Finally, the advantages of the statistical methods tend to be found in noisy and complex situations in which the outputs of the formulas are not very accurate, even when they are somewhat better than the expert judgments.

Heuristics and Biases (HB). Kahneman & Tversky (Tversky & Kahneman, 1974; Kahneman, 2011) showed that people, even experts, fall victim to judgment biases. However, most of the HB research is with college students performing artificial, unfamiliar tasks, with no context to guide them. When researchers use a meaningful context, the judgment biases usually diminish. And besides, the heuristics are usually helpful, as Kahneman and Tversky themselves pointed out.

Sociology. Members of this community assert that expertise is a function of the community and of the artifacts surrounding the task, referring to “situated cognition” and “distributed cognition.” The expertise-deniers argue that expert cognition is socially constructed, and is not a function of individual knowledge. Clearly, team and situational factors play a role in expert performance, but this extreme position seems untenable — replace the experts on a team with journeymen, and see how the overall performance suffers.

Evidence-Based Performance. The idea here is that professionals, such as physicians, should base their diagnoses and remedies on scientific evidence instead of relying on their own judgments. Obviously, too many quack treatments and unjustified superstitions achieved popularity, and controlled experiments have helped weed these out. However, scientifically validated best practices aren’t a replacement for skilled judgment, which is needed for gauging confidence in the evidence, revising plans that don’t seem to be working, and applying simple rules to complex situations. In medicine, patients often present several conditions at the same time, whereas the evidence usually pertains to one condition or another.

Information Technology. Artificial Intelligence, Automation, and Big Data have each claimed to be able to replace experts. However, each of these claims is unwarranted. Let’s start with AI. Smart systems should be able to do things like weather forecasting better (and more cheaply) than humans, but the statistics show that human forecasters improve the machine predictions by about 25%, an effect that has stayed constant over time. AI successes have been in games like chess, Go, and Jeopardy: games that are well-structured, with unambiguous referents and definitive correct solutions. But decision makers face wicked problems with unclear goals in ambiguous and dynamic situations, conditions that are beyond AI systems. As Ben Shneiderman and I observed in a previous essay, humans are capable of frontier thinking, social engagement, and responsibility for actions. Next, we look at automation, which is supposed to save money by reducing jobs. However, case studies show that automation typically depends on having more experts, to design the systems and to keep them updated and running. Further, automation is often poorly designed and creates new kinds of cognitive work for operators. Last, Big Data approaches can search through far more records and sensor inputs than any human, but these algorithms are susceptible to seeing patterns where none really exist. The Google Flu Trends project was publicized as a success story, but subsequently failed so badly that it was removed from use. Big Data algorithms follow historical trends, but may miss departures from these trends. Further, experts can use their expectancies to spot missing events that may be very important, but Big Data approaches are unaware of the absence of data and events.

Therefore, none of these communities poses a legitimate threat to expertise. Left unchallenged, the overstatements and confusions that lie behind these claims can lead to a downward spiral in which experts are dismissed. Of course, we need to learn from the critiques of each of these communities. We need to appreciate their contributions and capabilities, in order to move beyond the adversarial stance taken by each community. Ideally, we will be able to foster a spirit of collaboration in which their positive findings and techniques can be used to strengthen the work of experts.

References

Kahneman, D. (2011). Thinking, fast and slow. New York: Farrar, Straus & Giroux.

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185, 1124–1131.

Klein, G., Shneiderman, B., Hoffman, R.R., & Wears, R.L. (in press). The war on expertise: Five communities that seek to discredit experts. In P. Ward, J.M. Schraagen, J. Gore, & E. Roth (Eds.), The Oxford Handbook of Expertise. Oxford, UK: Oxford University Press.

Klein, G., Shneiderman, B., Hoffman, R.R., & Ford, K.M. (in press). Why expertise matters: A response to the challenges. IEEE Intelligent Systems.