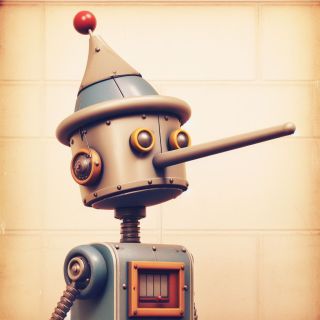

Why AI Lies

For chatbots, the truth is elusive; it is for humans, too.

Posted October 27, 2023 | Reviewed by Gary Drevitch

Key points

- An attorney got in trouble for using ChatGPT to outsource legal research.

- AI is trained on fiction and fake news, but there’s no simple fix for errors and “hallucinations.”

- Chatbots are trained to imitate human speech patterns, including lies.

Attorney Steven Schwartz heard about large language models (LLMs) from his children. He read a few articles on the subject, one saying that the new artificial intelligence (AI) chatbots could make legal research obsolete.

Schwartz asked OpenAI’s ChatGPT to help him with the research for a lawsuit; he was representing an airline passenger who suffered injuries after being struck by a serving cart on a 2019 flight.

ChatGPT instantly summarized comparable cases, such as Martinez v. Delta Air Lines and Varghese v. China Southern Airlines. Schwartz put them in his filing. Unfortunately for Schwartz, many of the cases (and even some of the airlines) didn’t exist.

The defendant’s lawyers complained that they could not locate the cases cited. At first, Schwartz and another attorney at his firm gave “shifting and contradictory explanations.” Then Schwartz told the judge what had happened.

The story went viral, combining as it did two of our culture’s alpha villains (lawyers and AI). According to Forbes, Schwartz’s law firm told Judge P. Kevin Castel that it had “become the poster child for the perils of dabbling with new technology.”

Unimpressed, Judge Castel fined Schwartz and his colleague $5,000 for a filing he called “gibberish.”

Why do LLMs often spin convincing but bogus answers (a.k.a. "hallucinations")? The short answer is that LLMs have no innate conception of truth or falsehood. They use a purely statistical model of human language created from news stories, blog posts, ebooks, and other human-created text online. This statistical model allows LLMs to guess the next word after a prompt and to do so repeatedly to generate an answer or an essay.
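To make the “guess the next word, then repeat” loop concrete, here is a minimal Python sketch of a toy bigram model. It is an assumption-laden illustration, not how GPT-4 works: real LLMs use large neural networks over subword tokens and long contexts, while this toy only counts which word followed which in a tiny made-up corpus. The function names `train_bigrams` and `generate` are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word follows each other word in the text."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_words, rng):
    """Guess the next word over and over, weighting by observed frequency."""
    out = [start]
    for _ in range(max_words):
        followers = counts.get(out[-1])
        if not followers:
            break  # nothing ever followed this word in the training text
        candidates, weights = zip(*followers.items())
        out.append(rng.choices(candidates, weights=weights)[0])
    return " ".join(out)


# A tiny hypothetical "training corpus" built from the article's example sentences.
corpus = (
    "the dog ate my homework . "
    "the check is in the mail . "
    "the dog is in the yard ."
)
model = train_bigrams(corpus)
print(model["the"])  # which words followed "the", and how often
print(generate(model, "the", 8, random.Random(0)))
```

Nothing in these counts records whether a sentence is true: “the dog is in the mail” is exactly as reachable as “the check is in the mail,” because both are stitched together from word pairs the model has seen.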

LLMs are trained on fiction, nonfiction, fake news, and serious journalism. You might wonder, why don’t they restrict the training material to fact-checked nonfiction?

Well, the L in LLM stands for large. The models’ power depends on training with massive quantities of text, as in trillions of words. It would be impractical to fact-check everything.
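A back-of-envelope calculation shows the scale. This Python sketch uses three hypothetical round numbers (a one-trillion-word corpus, a reader who gets through 250 words per minute, and a 2,000-hour working year); none of them are measured figures, and the estimate covers only reading the text once, not checking it.

```python
# All three inputs are hypothetical round numbers chosen for illustration.
WORDS_IN_CORPUS = 1_000_000_000_000  # one trillion words, the low end of "trillions"
READING_SPEED_WPM = 250              # assumed words per minute for a careful reader
HOURS_PER_WORK_YEAR = 2_000          # assumed full-time working year

reading_hours = WORDS_IN_CORPUS / READING_SPEED_WPM / 60
person_years = reading_hours / HOURS_PER_WORK_YEAR
print(f"about {person_years:,.0f} person-years just to read the text once")
# prints "about 33,333 person-years just to read the text once"
```

Actual fact-checking would be far slower than reading, so under these assumptions the real cost would be larger still.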

Even well-researched nonfiction can be false. A 1988 newspaper article would report that Ronald Reagan is president and Pluto is a planet. What was true then may not be true now.

True or false: I drive an orange Lamborghini. It depends on who’s speaking. The problem is not just pronouns. True or false:

The dog ate my homework.

The check is in the mail.

These are clichéd lies, but they are not always lies. Sometimes, the dog does eat your homework.

Many people are surprised to learn that LLMs also make math errors. Microsoft researcher Sébastien Bubeck posed this problem to a prerelease version of OpenAI’s GPT-4:

7*4+8*8=

The chatbot’s answer was 120. The correct answer is 92.

To be open-minded, I’ll admit that the answer depends on the somewhat arbitrary convention that the multiplications are done before the addition.

GPT-4’s neural network was indeed trained on that convention, if only because of the ubiquitous genre of clickbait posts daring readers to show their skills with similar calculations. But an answer of 120 is wrong under any order of calculation.
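The claim that 120 is wrong under any order of calculation can be checked exhaustively. This Python sketch (the helper name `all_groupings` is hypothetical) tries every way of parenthesizing 7*4+8*8, keeping the numbers and operators in their original order, and collects the possible results.

```python
import operator
from functools import lru_cache

OPS = {"+": operator.add, "*": operator.mul}


def all_groupings(nums, ops):
    """Return every value obtainable by parenthesizing nums/ops in any way."""

    @lru_cache(maxsize=None)
    def solve(i, j):
        # All values of the sub-expression spanning nums[i] .. nums[j].
        if i == j:
            return frozenset([nums[i]])
        results = set()
        for k in range(i, j):  # ops[k] is the operation applied last
            for left in solve(i, k):
                for right in solve(k + 1, j):
                    results.add(OPS[ops[k]](left, right))
        return frozenset(results)

    return set(solve(0, len(nums) - 1))


values = all_groupings((7, 4, 8, 8), ("*", "+", "*"))
print(sorted(values))  # [92, 288, 476, 672]
print(120 in values)   # False
```

The usual convention (multiplications first) gives 92, strict left-to-right evaluation gives 288, and the other groupings give 476 or 672. None of them gives 120.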

Why are LLMs bad at math? In essence, it’s because the successive numbers in a mathematical expression are harder to predict than words in a sentence. There are infinitely many numbers, far more than any neural network could learn by rote.
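To put the point about the sheer quantity of numbers in concrete terms, this Python sketch counts how many distinct two-operand problems exist when both operands have n digits. The function name `count_problems` is hypothetical, and the count is an illustration added here, not a model of how GPT-4 computes: it only shows that each extra digit multiplies the number of possible problems by 100.

```python
def count_problems(n_digits):
    """Count ordered pairs (a, b) of positive n-digit integers, e.g. for a * b."""
    n_digit_numbers = 9 * 10 ** (n_digits - 1)  # 1-digit: 1..9, 2-digit: 10..99, ...
    return n_digit_numbers ** 2


for n in (1, 2, 5, 10, 20):
    print(f"{n:>2}-digit operands: {count_problems(n):.1e} distinct problems")
```

With 10-digit operands the count is already about 8.1 × 10^19, which far exceeds the number of words in even a trillion-word training corpus.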

Curious, Bubeck asked GPT-4 to show its work. The chatbot explained the calculation in detail, ending up with the correct answer of 92. When Bubeck reminded the bot that it had initially said 120, it replied,

That was a typo, sorry. The correct answer is 92, as shown in the solution.

A typo. Humans have clumsy fingers and fragile egos, which we protect with white lies. A body of cognitive science research suggests that we confabulate a running narrative in which our actions are more rational, admirable, and consistent than they really are.

Rather than owning up to its inconsistency, GPT-4 was gaslighting its audience. Chatbots do not have egos, but they imitate humans who do.

References

Bubeck, Sébastien (2023). "Sparks of AGI: Early Experiments with GPT-4." Talk given at MIT, Mar. 22, 2023. youtu.be/qbIk7-JPB2c?si=hWs5oAEpReIxAJm5