The Research Literature Looks Too Good to Be True

A new way of publishing academic research challenges the past literature.

Posted February 10, 2020 Reviewed by Lybi Ma

When I was a kid, I watched SportsCenter almost every morning before school. The show recapped the biggest stories and games in US sports each day. Although I never played basketball, I loved watching the NBA highlights. SportsCenter would summarize the games and show you all of the best shots and dunks.

One day, they showed statistics about the best shooters in the league. I was really surprised to learn that the best 3-point shooters made about 1 out of every 3 shots they took. How could that be? From the clips I saw, I didn’t think they ever missed a shot. The problem with my reasoning, of course, is that highlight shows don’t show you the missed shots. Why would they? It’s much more exciting to see a player sink a 3-pointer than to watch them miss. However, if you want to know who the best shooter truly is, you can’t just count the shots they make; you need to know how many they’ve missed too.

Science might have a similar problem. Researchers tend to share the results of their studies in academic journals. If you thought these journals reflected all the science that’s being done, you’d probably think of researchers the same way I thought of basketball players: they never miss. The predictions described in published papers are overwhelmingly supported by the data.

It’s possible that this view of science accurately represents the research that has been conducted. If that were the case, it would suggest that scientists are very good at making predictions. That is, our hypotheses about the world are very likely to be true. It would also suggest that we’re very good at running studies. That is, we design experiments that measure whatever we’re studying extremely well, and we always collect enough data to reliably confirm our hypotheses.

However, it’s also possible that academic journals are the SportsCenter of science. That is, they show you all the best shots and dunks, but not the misses. This would create a problem for understanding the reliability of research. If we only see when studies “work” (that is, find support for their hypotheses), we won’t learn when they don’t.

This is the central problem examined in a new paper by Anne Scheel, Mitchell Schijen, and Daniël Lakens. In it, they looked at how frequently research articles reported that the data confirmed their hypotheses. For a comparison group, they examined a new type of research article: Registered Reports. Registered Reports flip the order of academic publishing and carry out peer review before the research is conducted. That is, rather than evaluating researchers on what they did (and what they found), in Registered Reports, researchers are evaluated on what they plan to do (and what they hope to find).

If the research plan is sound and worthwhile, the Registered Report is given in-principle acceptance before the research is conducted. As long as researchers conduct the study that was approved, their results will be published regardless of what they find. This prevents both researchers and editors from cherry-picking the best results for publication.

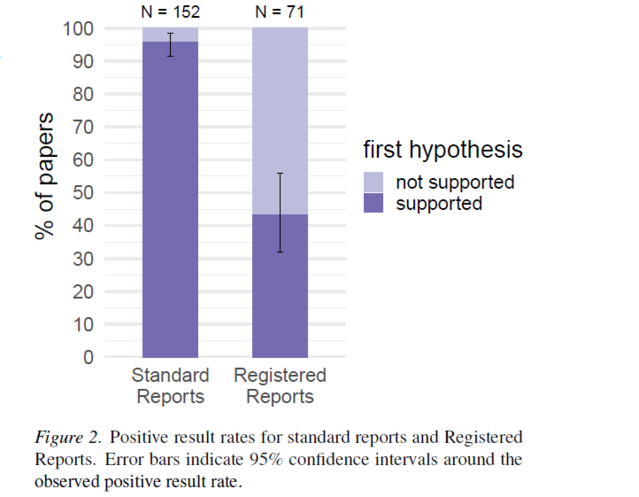

How do standard academic articles compare to Registered Reports? Standard articles seem to paint a much rosier picture of the research landscape than may be warranted. In the authors’ sample of standard articles, the first hypothesis reported was supported by the data 96% of the time. In their sample of Registered Reports, that rate was only 44%.

What might be causing this difference? There are a couple of possible (and not mutually exclusive) explanations. First, it might be the case that the hypotheses proposed in Registered Reports are much less likely to be true than those investigated in standard articles. This would mean that researchers are submitting much riskier hypotheses as Registered Reports than they would investigate for a standard journal submission. Though possible, this seems somewhat unlikely given the Registered Report pipeline. Part of the pre-study peer review is assessing the soundness of the research question, and I suspect peer reviewers would look unfavorably on bonkers research questions (bonkers being a very technical term for "unlikely to be true").

A second explanation is that researchers and journal editors are treating standard articles like a highlight reel for science. Researchers who find negative results in a given study (that is, results that do not support their hypotheses) may choose not to submit those results for publication. Similarly, journal editors may be less interested in publishing studies with negative results.

This phenomenon (often referred to as publication bias) would create a distorted view of the scientific record. Researchers have been warning about this distortion for decades (e.g., Sterling, 1959; Greenwald, 1975; Rosenthal, 1979), and this comparison with Registered Reports seems to confirm those fears.

Overall, I think this paper demonstrates that the research literature is a bit too much like SportsCenter. It privileges positive results at the expense of negative ones. To have a more complete and accurate account of science, we need to know when studies don’t work, not just when they do.

However, I also think the results of this paper are pretty encouraging for researchers. Even when we counteract publication bias (through the Registered Reports process), we still find positive results 44% of the time. I think that’s pretty good. As a point of comparison, Steph Curry, arguably the best 3-point shooter in the NBA right now (if not of all time), makes about 44% of his 3-pointers. Scientists might not be right 96% of the time in reality, but being the Steph Curry of hypothesis testing is an impressive achievement.

References

Greenwald, A. G. (1975). Consequences of prejudice against the null hypothesis. Psychological Bulletin, 82(1), 1-20.

Rosenthal, R. (1979). The file drawer problem and tolerance for null results. Psychological Bulletin, 86(3), 638-641.

Sterling, T. D. (1959). Publication decisions and their possible effects on inferences drawn from tests of significance—or vice versa. Journal of the American Statistical Association, 54(285), 30-34.