How pathetically scanty my self-knowledge is compared with, say, my knowledge of my room. There is no such thing as observation of the inner world, as there is of the outer world.

~ Kafka

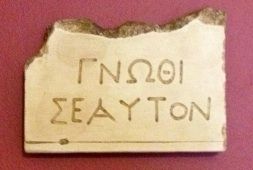

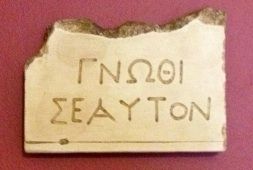

Social psychologists and other mortals are hypnotized by the Delphic demand to "Know thyself." Actually, it was not the oracle speaking, but probably a sophist prince who put these words on the front of the temple of Apollo. He did not ask that we run fast, lose weight, or win battles. He asked that we know ourselves. Why? What did he care? Perhaps the sophist prince thought that if we knew ourselves, society would be in better shape [which raises the question of why a ruler might want such a state of affairs]. Alternatively, he was a regal prankster, who knew us (and himself) well enough to realize that self-knowledge is hard. After demanding the impossible, he could sit back and enjoy the spectacle of us blundering through our self-related illusions and fallacies. There is something Socratic about this attitude, and many people (falsely) believe that the master of ignorance himself carved the Delphic imperative into stone.

Social psychology supports a cottage industry dedicated to the case of human ignorance. Giving a nod to Kafka’s pessimism, an ever-flowing stream of studies reveals our lack of self-insight and our systematic straying from the truth (see here for an earlier post on the matter). Meanwhile, however, evidence for accuracy has also been piling up. There are now 22 meta-analyses on the accuracy of self-knowledge, each aggregating the findings of many (though overlapping sets of) individual studies. Zell & Krizan (2014) integrated these meta-analytic results in what they call a metasynthesis, or a second-order meta-analysis. The grand average effect size is a correlation of .29. The correlation coefficient r is a common metric to represent the size of an effect or the degree to which variance in the observed data is systematic and thus predictable. A positive correlation means that as actual ability (competence, performance, test scores) goes up, so do people’s estimates of their scores.
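To get a feel for what a correlation of about .29 between actual ability and self-estimates looks like, here is a minimal sketch with simulated data. Everything in it is an assumption made for illustration: the standard-normal scores, the sample size, and the helper names `pearson_r` and `simulate_self_estimates` are hypothetical and do not come from Zell & Krizan's data.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate_self_estimates(n, rho, seed=0):
    """Simulate n people whose self-estimates correlate about rho with ability.

    Assumption: ability and estimation noise are both standard normal.
    """
    rng = random.Random(seed)
    actual = [rng.gauss(0, 1) for _ in range(n)]
    noise_weight = math.sqrt(1 - rho ** 2)
    # Each estimate is part true ability, part independent noise.
    estimates = [rho * a + noise_weight * rng.gauss(0, 1) for a in actual]
    return actual, estimates


actual, estimates = simulate_self_estimates(n=20000, rho=0.29)
r = pearson_r(actual, estimates)
print(f"sample r = {r:.3f}")
```

With a sample this large, the printed value should land close to the .29 that was built into the simulation. Most of the spread in the estimates is still noise, yet higher scorers do tend to give higher estimates.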

What to make of a correlation of .3? This is where mathematical statistics ends and interpretation begins. Often, the subjectivity of this endeavor cloaks itself in the mantle of scientific objectivity. It may be pointed out, for example, that a correlation of .3 is significantly larger than 0, or that all the individual effect sizes are positive. Conversely, no one proposes that a correlation be statistically compared with the limiting value of 1 (or -1); such a comparison is not possible because a correlation of 1 has no sampling distribution. Since meta-analysis is about the estimation of effect sizes, questions of significant difference are rarely posed anyway. How else, then, can one make sense of a particular numerical value? Cohen (1992) suggested that values of .1, .3, and .5 respectively represent small, medium, and large effects. His suggestion has become accepted tradition. Notice that Cohen’s benchmarks are closer to 0 than to 1. Cohen must have realized that correlations greater than .5 are hard to come by in the social and behavioral sciences, and so he down-calibrated. Indeed, metasyntheses of the social-psychological literatures show that effects of .3 are the most common. The accuracy effect thus lies squarely within the expected range, much like the average correlation between attitudes and behavior or between personality traits and behavior.

Yet, many people think the accuracy correlation should be higher. They may point out that the proportion of explained variance, which is given by r squared, is low. This is a bit of a mental trick. Even if correlation coefficients were uniformly distributed over the range between 0 and 1, their squared values would be radically right-skewed, thereby making the empirical record look dismal. Those expecting greater accuracy may also note that attitude-behavior correlations or personality-behavior correlations are not the right standard of comparison, but that reliability or validity correlations are. Such correlations need to exceed .7 to be respectable. Consider validity. A measurement or a measurement instrument is valid inasmuch as its numerical values are correlated with true values. Hence, people’s estimates of their own ability and test scores can be respectively regarded as measurements and criterion values. Now we can see that the analogy is misleading. The validity of a measurement instrument is assessed by correlating many measurements with criterion values. In the case of accuracy correlations, however, each person assesses only him- or herself, which means that each estimate comes from a different measurement instrument (i.e., person). Accuracy correlations would probably be higher if each individual estimated several of his or her abilities and if these estimates were correlated with test scores.
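The point about squared correlations can be checked with a small simulation. This is only a sketch under a stated assumption: correlations are drawn uniformly between 0 and 1, which is the hypothetical case named above and not a claim about any real literature. The variable names are made up for illustration.

```python
import random
import statistics

rng = random.Random(0)

# Assumption: correlations uniformly distributed between 0 and 1.
rs = [rng.random() for _ in range(100_000)]
r_squared = [r * r for r in rs]

mean_r = statistics.fmean(rs)
mean_r2 = statistics.fmean(r_squared)
median_r2 = statistics.median(r_squared)

# Share of squared values that fall in the lowest quarter of the 0-1 range.
share_below_quarter = sum(v < 0.25 for v in r_squared) / len(r_squared)

print(f"mean r         = {mean_r:.3f}")
print(f"mean r^2       = {mean_r2:.3f}")
print(f"median r^2     = {median_r2:.3f}")
print(f"share r^2<0.25 = {share_below_quarter:.3f}")
```

Under this assumption, theory gives a mean of 1/2 for r but a mean of 1/3 and a median of 1/4 for r squared, so about half of all squared values pile up in the bottom quarter of the scale. A mean above the median is the signature of right skew. For the accuracy correlation itself, .29 squared is roughly .08.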

Zell & Krizan’s finding that the average accuracy correlation is .3 is interesting, but of little theoretical relevance. Extreme values of 0 (no accuracy) or 1 (perfect accuracy) are unlikely anyway, although one might expect some positive, non-extreme value. Should this value be .5 instead of .3, no theoretical implications would follow. Moving forward, Zell & Krizan show that several structural variables affect accuracy. For example, accuracy is higher for specific than for diffuse abilities, and it is higher in familiar than in unfamiliar domains. These moderator effects are plausible, and do not require a sophisticated theory in order to be explained. Interestingly, these moderator effects are smaller than the grand effect (when compared with 0), a state of affairs that seems to puzzle no one. Moderator effects are respected whenever they are detectable; they are never compared with a maximum value they might take. Framed differently, we might conclude that the grand average accuracy effect is large because it is larger than the moderator effects that qualify it.

In sum, Zell & Krizan provide an interesting, if theoretically bland, overview and integration of the voluminous literature on the accuracy of self-perception. The sage of Delphi would be pleased. Humans seem to have just enough self-insight to be cocky enough to ignore his imperative, but not enough to realize how badly they need his advice.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112, 155-159.

Zell, E., & Krizan, Z. (2014). Do people have insight into their abilities? A metasynthesis. Perspectives on Psychological Science, 9, 111-125.