Unless a reviewer has the courage to give you unqualified praise, I say ignore the bastard. –John Steinbeck on letting go of unwanted anchors

Heuristics are mental shortcuts that allow people to make quick decisions or solve problems with minimal mental effort. The heuristic of anchoring (and insufficient adjustment) forms part of a trinity of thinking tools, along with the representativeness heuristic and the availability heuristic. They allow us to make reasonably good judgments much of the time while consigning us to a state of predictable irrationality, according to Tversky and Kahneman (1974).

Incoherence and inaccuracy are the characteristics of irrationality. While many research studies demonstrate a bias, for example, as a correlation that is too high or a difference between two means that is too large, direct evidence for incoherence or inaccuracy is rare. Likewise, the question of how people should reason if not by heuristics is addressed in some studies but not in others. In this post, I will comment briefly on the availability heuristic, and then address the anchoring heuristic in more detail.

As may be recalled, the availability heuristic is about memory. When asked to estimate a quantity (e.g., a frequency or a ratio), people may base their judgments on the number of relevant instances they can recall and the ease with which they can recall them. This heuristic way of thinking yields good (i.e., accurate) results if memory performance is not also affected by factors unrelated to the actual quantity. To illustrate the research strategy that seeks to demonstrate the existence of a bias without offering clear lessons about (in)accuracy, consider a classic study by Schwarz et al. (1991). These researchers asked participants to recall instances where they had acted assertively, and they found that the ease with which respondents performed this memory task predicted their self-ratings of assertiveness. The ease of recall was experimentally manipulated, and thus, its effect revealed an availability bias (see Kwan et al., 2019, for a partial replication).

It remains probable that without this manipulation, assertive individuals will find it easier to recall assertive behaviors than will unassertive individuals. While the significant result of the experimental manipulation showed that a bias had been created, it did not show to what extent the accuracy of the self-ratings had suffered. One may speculate that on average, self-ratings had become less accurate because the experimental manipulation of ease of recall was independent of respondents’ true level of assertiveness. In other words, adding a bias B to a model of judgment J that otherwise comprises only Truth T and Random Error E increases the typical size of the difference between Judgment J and Truth T (i.e., the inaccuracy, J − T, is larger on average for J = T + B + E than for J = T + E). Here, bias devolves into error.
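The step from bias to error can be written out as a short derivation. This is only a sketch under assumptions the post does not state: inaccuracy is measured as the mean squared difference, the random error E has mean zero, and the bias B is uncorrelated with E. To avoid a clash with the error term E, the expectation operator is written as a blackboard-bold E.

```latex
\begin{align*}
\mathbb{E}\big[(J - T)^2\big]
  &= \mathbb{E}\big[(B + E)^2\big]
     && \text{since } J = T + B + E \\
  &= \mathbb{E}[B^2] + 2\,\mathbb{E}[BE] + \mathbb{E}[E^2] \\
  &= \mathbb{E}[B^2] + \mathbb{E}[E^2]
     && \text{since } \mathbb{E}[BE] = 0 \text{ under the assumptions} \\
  &\ge \mathbb{E}[E^2].
\end{align*}
```

The last term is the mean squared inaccuracy of the bias-free model J = T + E, so any nonzero bias that is unrelated to the truth can only add to it.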

A similar situation arises in studies of the anchoring heuristic. This heuristic comprises a failure to ignore what must be ignored, that is, the irrelevant anchor (Krueger, 2020). A person estimating the population of Greenland should not offer a higher number after rejecting the claim that the island’s population is greater than 100 million, or a lower number after rejecting the claim that it is less than 100 persons. When, nonetheless, mean estimates are higher following a high anchor than following a low one, a bias is said to have occurred. The implication for accuracy at the level of individual judges is analogous to what we saw in the case of the availability heuristic. As a systematic bias is produced independently of actual values (Truth), this additional variation folds into the error term. With anchoring, the average size of J − T is larger if J = T + B + E than if J = T + E.

The focus of research tends to be on individuals, where some are experimentally biased in one direction, while others are biased in the other direction. The result is a greater variance in the judgments and thus larger error on average. But there is another way. We can explore the implications of bias on the aggregated group judgment or we can submit each individual to both directional biases and study the result. Accuracy now becomes a question of crowd rationality.
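The contrast between individual and aggregated error can be illustrated with a small simulation. This is a minimal sketch with made-up parameters, not a reanalysis of any study: each simulated judge is randomly pushed up or down by the same amount (a stand-in for a high or low anchor), and the unanchored judgments are assumed to be unbiased, which need not hold in practice. The function name `simulate` and all of its parameters are hypothetical.

```python
import random
import statistics


def simulate(n=10_000, truth=100.0, bias=10.0, noise_sd=5.0, seed=42):
    """Compare individual and crowd error with and without a directional bias.

    All numbers are illustrative assumptions, not empirical values.
    """
    rng = random.Random(seed)
    unbiased, biased = [], []
    for _ in range(n):
        e = rng.gauss(0.0, noise_sd)                   # random error E
        b = bias if rng.random() < 0.5 else -bias      # high or low "anchor"
        unbiased.append(truth + e)                     # J = T + E
        biased.append(truth + b + e)                   # J = T + B + E

    def mean_individual_error(judgments):
        # Average absolute distance of each single judgment from the truth.
        return statistics.fmean(abs(j - truth) for j in judgments)

    def crowd_error(judgments):
        # Absolute distance of the averaged judgment from the truth.
        return abs(statistics.fmean(judgments) - truth)

    return {
        "individual_unbiased": mean_individual_error(unbiased),
        "individual_biased": mean_individual_error(biased),
        "crowd_unbiased": crowd_error(unbiased),
        "crowd_biased": crowd_error(biased),
    }


result = simulate()
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions the individual error grows considerably once the bias is added, while the error of the averaged judgment stays small because the upward and downward pushes largely cancel.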

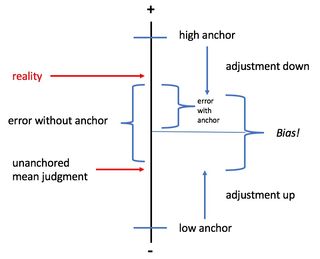

Consider display 1. The vertical line is the quantitative dimension of interest (e.g., the population of Greenland). A high and a low anchor are placed on this dimension, each with its own adjustment toward the center. The difference between the adjusted judgments is the bias. Somewhere along the line, we find the true value ("reality") and the average judgment made by those who had no anchor. The difference between these two values is the mean error in the absence of anchoring. Returning to the right side of the vertical line, we find the average of the post-anchor judgments represented by the thin horizontal line. The difference between its intersection with the vertical line and T (reality) is the average estimation error for the collective of both anchoring groups—or for individuals using both anchors. In this example, the collective error is smaller with than without anchors.
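The quantities in this display can be computed for any set of values. The sketch below uses invented numbers on an arbitrary scale, chosen only so that the collective error comes out smaller with anchors than without, as in the display. The function name `anchoring_summary` and its parameters are hypothetical, and the two anchoring groups are assumed to be of equal size so that a simple average is appropriate.

```python
def anchoring_summary(truth, unanchored_mean, low_anchored_mean, high_anchored_mean):
    """Summarize bias and mean errors for one anchoring scenario.

    Assumes equally sized high- and low-anchor groups (or individuals
    who judge once under each anchor), so the two means are weighted equally.
    """
    anchored_mean = (low_anchored_mean + high_anchored_mean) / 2
    return {
        # Difference between the adjusted judgments of the two groups.
        "bias": high_anchored_mean - low_anchored_mean,
        "anchored_mean": anchored_mean,
        # Mean error in the absence of anchoring.
        "unanchored_error": abs(unanchored_mean - truth),
        # Mean error of the collective of both anchoring groups.
        "collective_error": abs(anchored_mean - truth),
    }


# Hypothetical values, for illustration only.
summary = anchoring_summary(
    truth=50.0,
    unanchored_mean=30.0,
    low_anchored_mean=20.0,
    high_anchored_mean=70.0,
)
print(summary)
```

With these made-up inputs the bias is 50, the unanchored error is 20, and the collective error is 5, so averaging over both anchors lands closer to the true value than the unanchored mean does.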

Reality T, the mean unanchored individual judgment uJ, and the mean anchored judgment aJ are moving parts. Letting all three (or one or two at a time) roam along the vertical dimension and inspecting the size of the two types of mean error reveals the following:

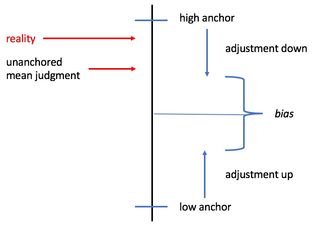

Anchoring increases (collective) accuracy if the true value and the mean unanchored estimate lie on different sides of the mean anchored estimate, or if both are greater or both are smaller than the mean anchored estimate and the true value is closer to the mean anchored estimate than to the mean unanchored estimate. The only way anchoring can decrease (collective) accuracy is for the true value and the mean unanchored estimate to be both greater or both smaller than the mean anchored estimate and for the true value to lie closer to the mean unanchored than to the mean anchored estimate. Display 2 shows this scenario.
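The case distinction boils down to one comparison: anchoring helps when the mean anchored estimate is closer to the true value than the mean unanchored estimate is. The sketch below, with hypothetical function names, writes out both the direct comparison and the case-by-case rule (same side or different sides of the mean anchored estimate) and checks that they agree on a small grid of distinct values.

```python
from itertools import permutations


def anchoring_helps(truth, unanchored, anchored):
    """Direct test: is the anchored mean closer to the truth?"""
    return abs(anchored - truth) < abs(unanchored - truth)


def anchoring_helps_by_cases(truth, unanchored, anchored):
    """The same test spelled out as a case distinction.

    Assumes the anchored mean differs from both other values,
    so that "side" is well defined.
    """
    side_product = (truth - anchored) * (unanchored - anchored)
    different_sides = side_product < 0
    same_side = side_product > 0
    truth_closer_to_anchored = abs(truth - anchored) < abs(truth - unanchored)
    return different_sides or (same_side and truth_closer_to_anchored)


# Check agreement for every arrangement of three distinct grid points.
mismatches = [
    (t, u, a)
    for t, u, a in permutations(range(7), 3)
    if anchoring_helps(t, u, a) != anchoring_helps_by_cases(t, u, a)
]
print("mismatches:", mismatches)
```

The direct comparison is the more convenient form in practice; the case version is kept only to mirror the verbal rule.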

Anchoring would likely decrease accuracy only if accuracy were high without anchoring, that is, when judges already possess good knowledge; when they do, they will find it easy to ignore the anchors and will thus produce only a small bias. Conversely, anchoring can increase accuracy when accuracy without anchors is low, that is, when judges have little valid knowledge. These judges, recognizing their uncertainty or ignorance, will find it easy to accept the anchors. They will realize a large accuracy gain if they then—as a wise crowd—average their judgments over the high- and low-anchor scenarios.

Judges who do not know whether their reliance on anchors will increase or decrease the accuracy of their estimates may want to bet on the former. As we have seen, there are more scenarios in which anchoring helps than there are scenarios where anchoring hurts. It must be remembered, however, that both anchors need to be considered before estimates are averaged.

We now see that considering both anchors will produce some error while yielding good results most of the time, just as Tversky and Kahneman (1974) claimed.

References

Kwan, Y. C., Chu, M., DePaul, L., Gallego, M., Li, X., Kyler, N., Parikh, N., Stoermann, J., Stone, A., van de Goor, K., Wang, L., Zhao, S., & Pashler, H. (2019). An attempt to replicate Schwarz et al. (1991). https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3323491

Krueger, J. I. (2020). Available anchors and errors. Psychology Today Online. https://www.psychologytoday.com/us/blog/one-among-many/202004/available…

Schwarz, N., Bless, H., Strack, F., Klumpp, G., Rittenauer-Schatka, H., & Simons, A. (1991). Ease of retrieval as information: Another look at the availability heuristic. Journal of Personality and Social Psychology, 61, 195-202.

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185, 1124-1131.